Kubernetes v1.33 In-Place Pod Resizing: Resize Pods Without Restarting

Kubernetes has always been about elasticity—scaling workloads up and down depending on demand. Until v1.33 (Octarine release), resizing a pod’s CPU or memory meant deleting and recreating it. This worked fine for stateless apps but caused headaches for stateful workloads like databases, caching layers, or long-running ML jobs.

Now with in-place pod resizing, Kubernetes finally allows changing resource requests and limits on running pods without restarting them. This is one of the most practical improvements in years, and it unlocks new flexibility for operators and developers.

In this blog, we’ll cover:

Why pod resizing is important

How the feature works internally

Step-by-step practical examples

Real-world use cases (databases, ML, CI/CD pipelines)

Limitations and best practices

Why Pod Resizing Matters

Before v1.33, if you wanted to increase memory or CPU for a pod:

kubectl edit deployment my-app
kubectl rollout restart deployment my-app

That meant:

Pod is terminated and a new one is created

Connections to the pod are dropped

Stateful workloads suffer downtime

Adjustments require careful rolling updates

For example, if a PostgreSQL pod ran out of memory, you couldn’t simply add more RAM. You had to restart it, which risked query failures and transaction issues.

With in-place pod resizing, Kubernetes lets you change pod resource requests/limits dynamically while keeping the same pod alive.

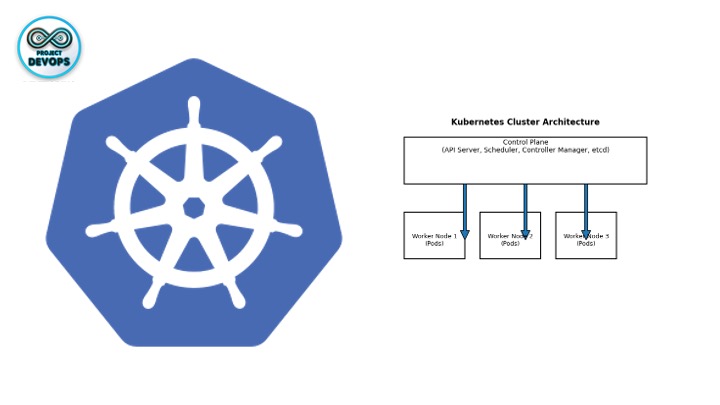

How In-Place Pod Resizing Works

The new feature introduces changes at multiple layers:

API Support

The Pod.spec.containers[].resources.requests/limits fields can now be updated after pod creation. In v1.33 the change is submitted through the pod's resize subresource.

Kubelet Enforcement

Kubelet applies updates by reconfiguring container cgroups directly (Linux kernel feature), instead of restarting containers.

Scheduler Awareness

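In v1.33 the kubelet, not the scheduler, decides whether the node can satisfy the new resources. If the resize cannot fit right now, it is marked Deferred and retried later; if it can never fit on the node, it is marked Infeasible. The scheduler takes the resized requests into account when placing other pods.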

No Restart

The pod keeps the same UID, IP, and process state. Your app continues running seamlessly.
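To make the cgroup step more concrete, here is a minimal sketch of how CPU and memory limits translate into cgroup v2 values. The helper names are hypothetical and not part of Kubernetes; the sketch assumes cgroup v2, the default 100 ms CFS period, whole-core or millicore CPU values, and binary memory suffixes only.

```shell
#!/bin/sh
# Hypothetical helpers (not part of Kubernetes) that illustrate how
# resource limits map onto cgroup v2 settings.

# CPU limit -> "quota period" pair, the format used by cpu.max.
# Accepts millicores ("500m") or whole cores ("2"); fractional values
# such as "0.5" are not handled in this sketch.
cpu_limit_to_cpu_max() {
  period=100000                        # assumed default CFS period (microseconds)
  case "$1" in
    *m) millis=${1%m} ;;               # "500m" -> 500
    *)  millis=$(( $1 * 1000 )) ;;     # "2"    -> 2000
  esac
  echo "$(( millis * period / 1000 )) $period"
}

# Memory limit -> bytes, the unit used by memory.max.
# Only Ki, Mi and Gi suffixes are handled; anything else is passed through.
mem_limit_to_bytes() {
  case "$1" in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *)   echo "$1" ;;
  esac
}

cpu_limit_to_cpu_max 500m   # prints: 50000 100000
mem_limit_to_bytes 1Gi      # prints: 1073741824
```

A resize from a 200m to a 500m CPU limit would, under these assumptions, raise the quota from 20000 to 50000 microseconds per period while the container's processes keep running.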

Enabling the Feature

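As of Kubernetes v1.33, in-place pod resizing is in beta and the InPlacePodVerticalScaling feature gate is enabled by default. You only need to set it explicitly on older clusters, where the feature was alpha (v1.27 through v1.32), or on clusters where it has been turned off.

Step 1: Enable Feature Gate

On clusters where the gate is off, add the following flag to the control plane components (kube-apiserver, kube-controller-manager, kube-scheduler) and to the kubelet on every node:

--feature-gates="InPlacePodVerticalScaling=true"

If you use managed Kubernetes (EKS, GKE, AKS), check your provider's documentation to see whether the feature is available on your cluster version.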
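Whether the flag is needed depends on the cluster version. The following sketch checks a version string against v1.33, the release in which the feature reached beta. The function name is hypothetical, and the check only looks at the major and minor numbers; it cannot tell whether an administrator has switched the gate off.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit status 0) when the given Kubernetes
# version string is v1.33 or newer.
inplace_resize_on_by_default() {
  v=${1#v}                    # "v1.33.1-gke.100" -> "1.33.1-gke.100"
  major=${v%%.*}              # "1"
  rest=${v#*.}                # "33.1-gke.100"
  minor=${rest%%.*}           # "33"
  minor=${minor%%[!0-9]*}     # drop non-numeric suffixes such as "33+"
  [ "$major" -gt 1 ] || { [ "$major" -eq 1 ] && [ "$minor" -ge 33 ]; }
}

if inplace_resize_on_by_default v1.33.1; then
  echo "beta: gate expected to be on by default"
else
  echo "alpha or unavailable: set the feature gate explicitly"
fi
```

In practice the version string would come from the server's gitVersion, which `kubectl version -o json` reports under serverVersion.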

Step-by-Step Example

Let’s see how this works in practice.

Step 1: Deploy a Demo Pod

demo-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: demo-pod
spec:
  containers:
  - name: demo-container
    image: nginx:1.27
    resources:
      requests:
        cpu: "100m"
        memory: "128Mi"
      limits:
        cpu: "200m"
        memory: "256Mi"

Apply it:

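kubectl apply -f demo-pod.yaml

Step 2: Check Pod Status

kubectl get pod demo-pod -o wide

You should see the pod in the Running state. The wide output does not list resources, so query the spec to confirm the 100m CPU and 128Mi memory requests:

kubectl get pod demo-pod -o jsonpath='{.spec.containers[0].resources}'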

Step 3: Resize Pod Without Restart

Now, increase CPU and memory requests/limits:

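kubectl patch pod demo-pod --subresource resize \
  -p '{"spec":{"containers":[{"name":"demo-container","resources":{"requests":{"cpu":"300m","memory":"512Mi"},"limits":{"cpu":"500m","memory":"1Gi"}}}]}}'

In v1.33, resource changes on a running pod go through the resize subresource, which requires kubectl v1.32 or newer.

Step 4: Verify No Restart Happened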

Check pod description:

kubectl describe pod demo-pod | grep -A5 "Limits"

Output before resize:

Limits:
  cpu: 200m
  memory: 256Mi
Requests:
  cpu: 100m
  memory: 128Mi

Output after resize:

Limits:
  cpu: 500m
  memory: 1Gi
Requests:
  cpu: 300m
  memory: 512Mi

Now confirm uptime didn’t reset:

kubectl get pod demo-pod -o jsonpath='{.status.startTime}'

You’ll notice the pod start time hasn’t changed: same pod, more resources!
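The start time shows that the pod was not recreated, but it does not reveal a container restart or a resize that is still pending. A few extra checks can be sketched as small wrappers around kubectl. The function names are hypothetical, and the PodResizePending condition type is an assumption based on the v1.33 behavior; older versions report resize state differently.

```shell
#!/bin/sh
# Hypothetical wrappers around kubectl; the pod name is the first argument.
# They only inspect the first container of the pod.

# Restart count of the container; it should be the same before and after a resize.
restart_count() {
  kubectl get pod "$1" -o jsonpath='{.status.containerStatuses[0].restartCount}'
}

# Resources the running container actually has, as reported in the pod status.
applied_resources() {
  kubectl get pod "$1" -o jsonpath='{.status.containerStatuses[0].resources}'
}

# Reason for a resize that has not been applied yet (assumed to be exposed as
# a PodResizePending condition); prints nothing when no resize is pending.
pending_resize_reason() {
  kubectl get pod "$1" \
    -o jsonpath='{.status.conditions[?(@.type=="PodResizePending")].reason}'
}

# Example usage against the demo pod:
#   restart_count demo-pod
#   applied_resources demo-pod
#   pending_resize_reason demo-pod
```

Comparing restart_count before and after the patch, and checking that applied_resources matches the new values, gives a more direct confirmation than the start time alone.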

Example 2: StatefulSet Database

Here’s a PostgreSQL StatefulSet that benefits from in-place resizing.

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgres
spec:
  serviceName: "postgres"
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
      - name: postgres
        image: postgres:16
        resources:
          requests:
            cpu: "500m"
            memory: "512Mi"
          limits:
            cpu: "1"
            memory: "1Gi"
        env:
        - name: POSTGRES_PASSWORD
          value: mypassword

If queries spike and memory isn’t enough, you can patch:

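kubectl patch pod postgres-0 --subresource resize \
  -p '{"spec":{"containers":[{"name":"postgres","resources":{"requests":{"memory":"2Gi"},"limits":{"memory":"3Gi"}}}]}}'

The database process keeps running in the same pod, with no restart. Note that this changes only the live pod: the StatefulSet template still holds the old values, so update it as well if the new size should survive a pod replacement.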
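Both patches in this post share the same JSON shape, so the payload can be generated instead of typed by hand. This is a minimal sketch with a hypothetical helper name; it assumes the values contain no quotes or backslashes and always sets all four fields.

```shell
#!/bin/sh
# Hypothetical helper: prints a resize patch for a single container.
# Arguments: container name, CPU request, memory request, CPU limit, memory limit.
# Values are inserted verbatim, so they must not contain quotes or backslashes.
resize_patch() {
  printf '{"spec":{"containers":[{"name":"%s","resources":{"requests":{"cpu":"%s","memory":"%s"},"limits":{"cpu":"%s","memory":"%s"}}}]}}\n' \
    "$1" "$2" "$3" "$4" "$5"
}

# Print the payload for the PostgreSQL example (CPU unchanged, memory raised).
resize_patch postgres 500m 2Gi 1 3Gi

# Example usage (requires a cluster and kubectl v1.32+ for --subresource):
#   kubectl patch pod postgres-0 --subresource resize \
#     --patch "$(resize_patch postgres 500m 2Gi 1 3Gi)"
```

Printing the payload first makes it easy to review the exact values before they are sent to the cluster.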

Use Cases

Databases & Stateful Workloads

Increase memory during spikes without downtime.

ML/AI Jobs

Grant more CPU or memory to training jobs mid-execution (GPU allocations cannot be resized in place).

CI/CD Pipelines

Resize build pods dynamically based on workload size.

Cost Optimization

Start with low resources and scale up only when needed.

Limitations

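Currently supports CPU and memory only; GPUs and other extended resources cannot be resized in place.

Requires the InPlacePodVerticalScaling feature gate, which is on by default in v1.33.

The node must have capacity for the new requests; otherwise the resize stays pending (Deferred) or is marked Infeasible.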

Still in beta: test thoroughly before production use.
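The capacity limitation can be illustrated with a simplified model of the decision. This is a sketch, not the kubelet's actual logic: the function name and the "Accepted" label are made up for illustration, only CPU is considered, and all values are in millicores.

```shell
#!/bin/sh
# Simplified, hypothetical model of how a resize request is classified.
#   $1 = new CPU request of the pod (millicores)
#   $2 = node allocatable CPU (millicores)
#   $3 = CPU currently available to this pod on the node, meaning unreserved
#        capacity plus the pod's own current request (millicores)
classify_resize() {
  if [ "$1" -gt "$2" ]; then
    echo "Infeasible"   # larger than the node itself: can never fit here
  elif [ "$1" -gt "$3" ]; then
    echo "Deferred"     # does not fit now, may fit once other pods free capacity
  else
    echo "Accepted"     # illustrative label only: the resize can proceed
  fi
}

classify_resize 300 4000 1000    # prints: Accepted
classify_resize 3000 4000 1000   # prints: Deferred
classify_resize 8000 4000 1000   # prints: Infeasible
```

An infeasible resize will not succeed on that node however long you wait, so the pod would need to be rescheduled onto a larger node in the usual way.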

Best Practices

Monitor resized pods with kubectl top pod or Prometheus.

Combine with Vertical Pod Autoscaler (VPA) for automation.

Don’t over-commit: leave headroom on nodes, because a resize that does not fit is deferred or marked infeasible instead of being applied.

Use for stateful or critical workloads first.
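For stateful workloads it can also help to state explicitly how each resource should be resized. The sketch below writes a variant of the demo pod that uses the container-level resizePolicy field: CPU changes are applied without a restart, while memory changes restart the container, which suits applications that only read their memory settings at startup. The file and pod names are illustrative, and the choice of policy per resource is an assumption about the workload, not a recommendation from the Kubernetes project.

```shell
#!/bin/sh
# Write an illustrative manifest; the pod and file names are hypothetical.
cat > demo-pod-resize-policy.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod-resize-policy
spec:
  containers:
  - name: demo-container
    image: nginx:1.27
    resizePolicy:
    - resourceName: cpu
      restartPolicy: NotRequired       # apply CPU changes in place
    - resourceName: memory
      restartPolicy: RestartContainer  # restart the container on memory changes
    resources:
      requests:
        cpu: "100m"
        memory: "128Mi"
      limits:
        cpu: "200m"
        memory: "256Mi"
EOF

# Apply it against a cluster with:
#   kubectl apply -f demo-pod-resize-policy.yaml
```

When no resizePolicy is set, both resources default to NotRequired, which is the behavior shown in the earlier examples.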

Conclusion

With Kubernetes v1.33, resizing pods without restarting them is finally possible. This reduces downtime, improves flexibility, and makes resource management much smoother.

For workloads like databases, ML jobs, and CI/CD builds, in-place resizing is a game-changer. Expect this to become the new standard in future releases.

If you haven’t yet, upgrade your cluster to v1.33 and start experimenting—it will change how you manage resources forever.
