The Ultimate Guide to Building AI Agents with GenAI and Agentic AI - from Local to Production

Artificial Intelligence is rapidly evolving from simple predictive models to autonomous, reasoning systems that can perform tasks, make decisions, and even collaborate with humans. This evolution is often described as Agentic AI — where Large Language Models (LLMs) don’t just generate responses but act as intelligent agents that plan, execute, and adapt.

If you’ve ever wondered how to build your own AI agent from scratch, train it with your own data, and deploy it into production, this guide is for you. We’ll go from basics to advanced, covering theory, tools, databases, deployment strategies, and real-world use cases.

1. What is an AI Agent?

An AI Agent is a software entity that:

Perceives: Understands input (text, voice, API, database).

Thinks: Uses reasoning or LLMs to decide what to do.

Acts: Calls tools, APIs, databases, or executes code.

Learns: Improves from feedback and history.

Unlike a simple chatbot, an agent can autonomously complete tasks.

Example: Asked "What’s the weather in New York?", a chatbot can only reply with text from its training data, while an agent would call a weather API, retrieve live data, and format a useful answer.
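To make the perceive-think-act cycle concrete, here is a minimal sketch in plain Python. The `think` step is a hard-coded rule standing in for an LLM, and `get_weather` is a hypothetical stub in place of a real weather API; both are assumptions for illustration only.

```python
from typing import Callable, Dict, Optional, Tuple

def get_weather(city: str) -> str:
    # Hypothetical stub standing in for a real weather API call
    fake_data = {"New York": "partly cloudy, 18 degrees C"}
    return fake_data.get(city, "unknown")

TOOLS: Dict[str, Callable[[str], str]] = {"get_weather": get_weather}

def think(user_input: str) -> Optional[Tuple[str, str]]:
    # Stand-in for LLM reasoning: pick a tool and its argument, or None if no tool is needed
    if "weather" in user_input.lower() and " in " in user_input:
        city = user_input.split(" in ", 1)[1].rstrip("?").strip()
        return "get_weather", city
    return None

def run_agent(user_input: str) -> str:
    # Perceive: the raw user input arrives here
    decision = think(user_input)               # Think
    if decision is None:
        return "I can answer that without tools."
    tool_name, arg = decision
    observation = TOOLS[tool_name](arg)        # Act: call the chosen tool
    return f"The weather in {arg} is {observation}."  # Format a useful answer

print(run_agent("What's the weather in New York?"))
```

In a real agent, `think` is an LLM call that chooses among many tools, but the control flow stays the same: decide, call a tool, then turn the observation into an answer.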

2. System Requirements (Before You Begin)

Depending on whether you want to run local models or use cloud APIs, system requirements differ.

For Local Development (using Hugging Face, Ollama, etc.)

OS: Ubuntu 22.04 / macOS / Windows 11

CPU: 8+ cores recommended

RAM: 16GB+

GPU (Optional but preferred): NVIDIA RTX 3060/3070+ (8–12GB VRAM)

Storage: 50GB free SSD

Python: 3.10+

For Cloud/Production (AWS/GCP/Azure)

AWS EC2 t3.large (2 vCPU, 8GB RAM) for lightweight agents that call hosted LLM APIs

AWS EC2 g4dn.xlarge (1 NVIDIA T4 GPU, 16GB VRAM) for GPU inference with small models

A Kubernetes cluster (e.g., Amazon EKS) for scaling multiple agents

Docker & Docker Compose for containerization

💡 If you’re experimenting, you can start with your laptop + OpenAI API and later scale to GPU servers.

3. Core Components of an Agentic AI System

To design an agent, you need the following layers:

LLM Engine

GPT, Gemini, Claude, LLaMA, Mistral, etc.

Provides reasoning & generation.

Memory

Short-term (conversation context).

Long-term (vector databases: Pinecone, FAISS, Weaviate).

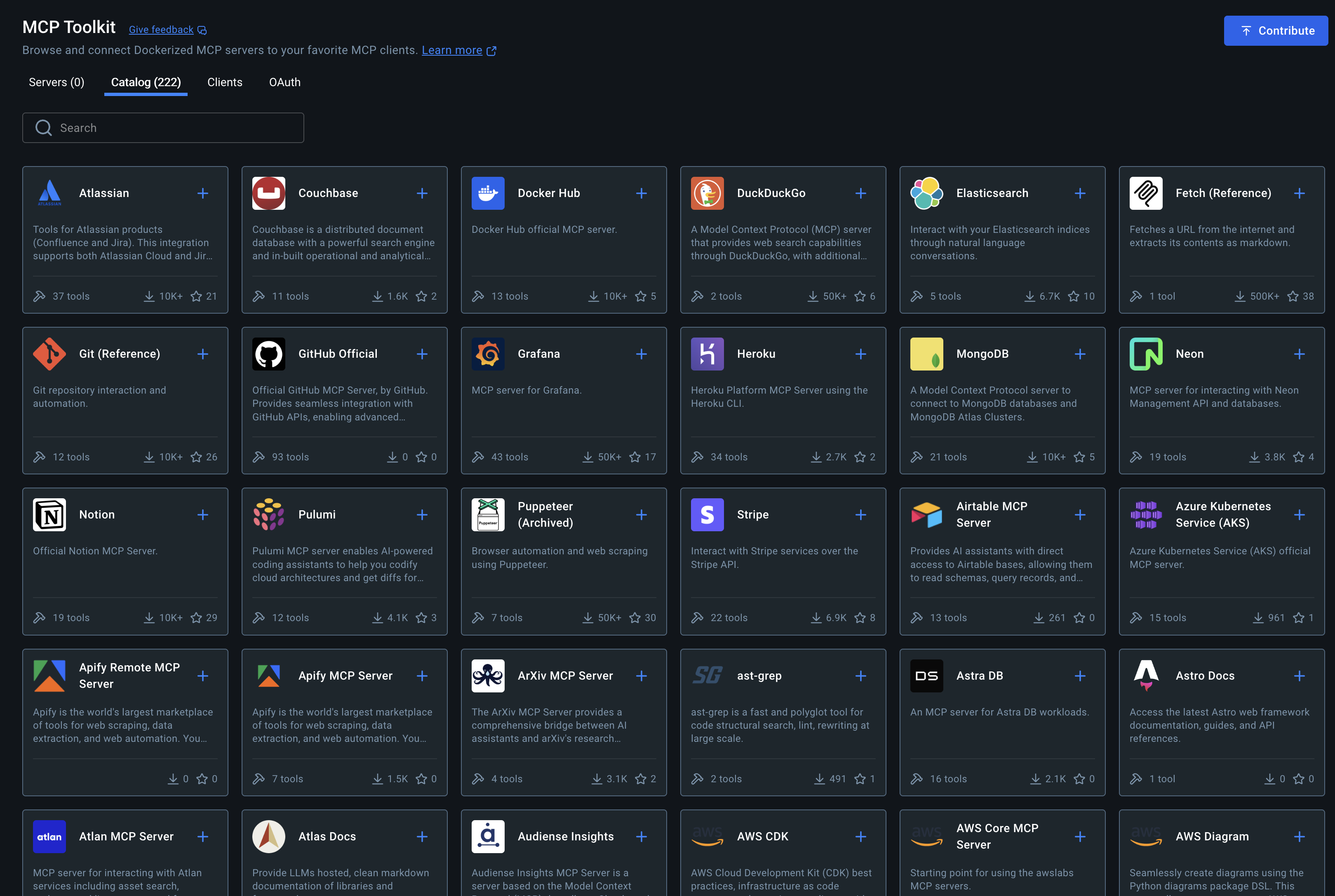

Tools & Plugins

APIs (weather, finance, flights).

Python/JS execution.

Database queries.

Planner

Breaks user request into steps.

E.g., LangChain agents, AutoGPT, CrewAI.

Feedback Loop

Self-evaluates & retries failed tasks.
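The feedback loop can be as simple as: attempt a task, have an evaluator check the result, and retry with that critique if the check fails. In the sketch below, `attempt` and `evaluate` are hypothetical callables; in a real agent both would typically be LLM or tool calls.

```python
from typing import Callable, List, Optional, Tuple

def run_with_feedback(
    task: str,
    attempt: Callable[[str, Optional[str]], str],
    evaluate: Callable[[str], Tuple[bool, str]],
    max_retries: int = 3,
) -> Tuple[str, List[str]]:
    """Try a task up to max_retries times, feeding the evaluator's critique back in."""
    feedback: Optional[str] = None
    history: List[str] = []
    for _ in range(max_retries):
        result = attempt(task, feedback)   # act, using the previous critique if any
        ok, feedback = evaluate(result)    # self-evaluate the result
        history.append(result)
        if ok:
            return result, history
    raise RuntimeError(f"Task failed after {max_retries} attempts: {feedback}")

# Toy example: the first try forgets the unit, the retry applies the feedback
def toy_attempt(task: str, feedback: Optional[str]) -> str:
    return "42 km" if feedback else "42"

def toy_evaluate(result: str) -> Tuple[bool, str]:
    return result.endswith("km"), "Answer must include a unit (km)."

answer, attempts = run_with_feedback("How far is it?", toy_attempt, toy_evaluate)
print(answer, attempts)  # 42 km ['42', '42 km']
```

Capping retries with `max_retries` keeps a stubborn failure from looping forever, and raising an error lets the caller escalate to a human.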

4. Building Your First AI Agent (Step-by-Step)

We’ll start with a simple Python agent that can look things up with a web search tool (the DuckDuckGo Instant Answer API) and summarize what it finds.

Install Dependencies

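```bash
pip install "langchain<1.0" langchain-openai langchain-community faiss-cpu requests
```

The classic `initialize_agent` API used in this guide is deprecated in newer LangChain releases, which is why the command pins a pre-1.0 version. The OpenAI integration reads your key from the `OPENAI_API_KEY` environment variable.

Code Example

```python
from langchain.agents import AgentType, Tool, initialize_agent
from langchain_openai import OpenAI
import requests

# Step 1: Define the LLM (temperature=0 for more deterministic answers)
llm = OpenAI(temperature=0)

# Step 2: Define a Tool
def search_web(query: str) -> str:
    # DuckDuckGo Instant Answer API; params= URL-encodes the query safely
    response = requests.get(
        "https://api.duckduckgo.com/",
        params={"q": query, "format": "json"},
        timeout=10,
    )
    response.raise_for_status()
    # AbstractText is an empty string (not missing) when there is no instant answer
    return response.json().get("AbstractText") or "No info found."

tools = [
    Tool(name="Web Search", func=search_web, description="Use for general search")
]

# Step 3: Initialize the agent
agent = initialize_agent(
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)

# Step 4: Run
print(agent.run("Who is the CEO of Google?"))
```

✅ This is a stateless agent: it forgets each question as soon as it has answered it. Next, we’ll add memory and databases.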
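Before adding memory, it helps to see what the `zero-shot-react-description` agent does on each step. It follows the ReAct pattern: the LLM writes a Thought, an Action (tool name) and an Action Input; the framework runs the tool and appends the result as an Observation, repeating until the LLM writes a Final Answer. The sketch below re-creates that loop in plain Python to show the mechanics. It is not LangChain's implementation: `fake_llm` is a scripted stand-in for the model, `fake_search` is a hypothetical stub, and the parsing regexes are simplified assumptions.

```python
import re
from typing import Callable, Dict, List

def react_loop(question: str, llm: Callable[[str], str],
               tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Simplified ReAct loop: ask the LLM, run the tool it names, feed back the observation."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        output = llm(transcript)
        transcript += output + "\n"
        final = re.search(r"Final Answer:\s*(.*)", output)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(.*)\nAction Input:\s*(.*)", output)
        if not action:
            raise ValueError(f"Could not parse LLM output: {output!r}")
        tool_name, tool_input = action.group(1).strip(), action.group(2).strip()
        observation = tools[tool_name](tool_input)
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("Agent stopped: too many steps")

# Scripted stand-in for the LLM: first requests a search, then answers
SCRIPT: List[str] = [
    "Thought: I should search.\nAction: Web Search\nAction Input: CEO of Google",
    "Thought: I know the answer now.\nFinal Answer: Sundar Pichai",
]

def fake_llm(prompt: str) -> str:
    # Choose the next scripted reply by counting observations so far
    return SCRIPT[prompt.count("Observation:")]

def fake_search(query: str) -> str:
    # Hypothetical stub for the search tool
    return "Sundar Pichai is the CEO of Google."

print(react_loop("Who is the CEO of Google?", fake_llm, {"Web Search": fake_search}))
```

With `verbose=True`, LangChain prints a similar Thought/Action/Observation trace for the real agent.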

5. Adding Memory (Short-Term & Long-Term)

Short-Term (Conversation Context)

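```python
from langchain.agents import AgentType, initialize_agent
from langchain.memory import ConversationBufferMemory

# Reuses `tools` and `llm` from the previous example.
# The conversational agent's prompt expects the history under "chat_history".
memory = ConversationBufferMemory(memory_key="chat_history")

agent_with_memory = initialize_agent(
    tools,
    llm,
    agent=AgentType.CONVERSATIONAL_REACT_DESCRIPTION,
    memory=memory,
    verbose=True,
)

agent_with_memory.run("Who is Sundar Pichai?")
agent_with_memory.run("What company does he work at?")
```

Now the agent remembers past interactions, so it can work out that "he" in the second question refers to Sundar Pichai.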
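Conceptually, a conversation buffer records each turn and pastes the transcript into the next prompt, which is how the agent can resolve "he" in the follow-up question. The class below is a simplified illustration of that idea, not LangChain's `ConversationBufferMemory`; the optional `max_turns` window is an added assumption showing one common way to keep prompts within the model's context limit.

```python
from typing import List, Optional, Tuple

class ConversationBuffer:
    """Stores (human, ai) turns and renders them as prompt context."""

    def __init__(self, max_turns: Optional[int] = None):
        self.turns: List[Tuple[str, str]] = []
        self.max_turns = max_turns  # None keeps the full history

    def save(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))
        if self.max_turns is not None and len(self.turns) > self.max_turns:
            # Sliding window: drop the oldest turns beyond max_turns
            self.turns = self.turns[len(self.turns) - self.max_turns:]

    def render(self) -> str:
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)

def build_prompt(memory: ConversationBuffer, question: str) -> str:
    # Put the history before the new question so the LLM can resolve references like "he"
    history = memory.render()
    prefix = history + "\n" if history else ""
    return f"{prefix}Human: {question}\nAI:"

memory = ConversationBuffer(max_turns=5)
memory.save("Who is Sundar Pichai?", "Sundar Pichai is the CEO of Google.")
print(build_prompt(memory, "What company does he work at?"))
```

A full buffer grows with every turn, so long conversations eventually need a window or a summary of older turns.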

Long-Term (Vector Database for Knowledge)

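Install FAISS (plus the LangChain integrations used below):

```bash
pip install faiss-cpu langchain-community langchain-openai
```

Store documents:

```python
from langchain_community.vectorstores import FAISS
from langchain_openai import OpenAIEmbeddings

docs = ["Sundar Pichai is CEO of Google.", "Satya Nadella is CEO of Microsoft."]

# Embed each text with OpenAI and index the vectors in an in-memory FAISS index
embedding = OpenAIEmbeddings()
db = FAISS.from_texts(docs, embedding)

query = "Who leads Google?"
results = db.similarity_search(query, k=1)  # closest document by embedding distance
print(results[0].page_content)
```

This gives you a searchable knowledge base; to let the agent query it, wrap `db.similarity_search` in a `Tool`, just like the web search function.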
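Under the hood, `similarity_search` embeds the query and returns the stored texts whose vectors are closest to it. The sketch below shows the same idea with a toy bag-of-words "embedding" and cosine similarity in plain Python. The word-count vectors are an assumption for illustration only: real embedding models capture meaning rather than shared words, and FAISS uses optimized indexes rather than a linear scan.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts (a real model returns a dense float vector)
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class TinyVectorStore:
    def __init__(self, texts: List[str]):
        # Index step: embed every document once, up front
        self.items: List[Tuple[str, Counter]] = [(t, embed(t)) for t in texts]

    def similarity_search(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

store = TinyVectorStore(["Sundar Pichai is CEO of Google.", "Satya Nadella is CEO of Microsoft."])
print(store.similarity_search("Who leads Google?"))
```

Here the match only works because "Google" appears in both texts; a real embedding model would also connect "leads" with "CEO", which is why RAG pipelines use learned embeddings.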

6. Training Agents with Your Own Data

If you want your agent to answer from your database (e.g., company policies, product docs):

Collect documents (PDFs, Word, CSV).

Use a text splitter to chunk them into paragraph-sized pieces.

Convert the chunks into embeddings using OpenAI or Hugging Face embedding models.

Store in vector DB (Pinecone, Weaviate, FAISS).

At query time → embed question → search DB → feed results into LLM.

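This is called Retrieval-Augmented Generation (RAG). The model itself is not retrained: your data is retrieved at query time and passed to the LLM as context.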
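The RAG steps above can be sketched end to end in plain Python. To keep it self-contained, this version chunks by word count with a small overlap, ranks chunks by words shared with the question (a stand-in for embedding search), and builds the augmented prompt instead of calling an LLM. The chunk sizes, prompt wording, and policy text are illustrative assumptions.

```python
import re
from typing import List

def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> List[str]:
    """Split text into windows of chunk_size words, each sharing `overlap` words with the last."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break
    return chunks

def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, chunks: List[str], k: int = 2) -> List[str]:
    # Stand-in for vector search: rank chunks by words shared with the question
    q = tokens(question)
    return sorted(chunks, key=lambda c: len(q & tokens(c)), reverse=True)[:k]

def build_rag_prompt(question: str, context: List[str]) -> str:
    joined = "\n---\n".join(context)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"
    )

question = "How many days of paid leave do employees get?"
# Hypothetical policy text standing in for your own documents
policy = "Employees get 20 days of paid leave per year. Remote work requires manager approval."
chunks = chunk_text(policy, chunk_size=8, overlap=2)
prompt = build_rag_prompt(question, retrieve(question, chunks, k=1))
print(prompt)  # in a real pipeline, this prompt is sent to the LLM
```

The overlap keeps a sentence that straddles a chunk boundary from being cut off entirely, at the cost of storing some words twice.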

7. Advanced Agents: Multi-Step Reasoning

Example:

“Book me a flight to Bangalore and then send details to my email.”

Steps:

Call flight API.

Extract booking info.

Call Gmail API to send mail.

Frameworks like LangChain agents or CrewAI orchestrate these steps: the LLM decides which tool to call next, and the framework runs it and feeds the result back.
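A plan like this can be represented as an ordered list of tool calls, where each step can read the results of earlier steps. In the sketch below the plan is hard-coded, whereas a framework would have the LLM produce it, and `book_flight` and `send_email` are hypothetical stubs rather than real flight or Gmail API calls.

```python
from typing import Any, Callable, Dict, List, Tuple

def book_flight(destination: str) -> Dict[str, str]:
    # Hypothetical stub for a flight-booking API
    return {"destination": destination, "flight": "XY123"}

def send_email(to: str, body: str) -> str:
    # Hypothetical stub for an email API such as Gmail
    return f"sent to {to}: {body}"

# Each step: (name, function that receives all results so far and returns a new one)
Plan = List[Tuple[str, Callable[[Dict[str, Any]], Any]]]

def execute_plan(plan: Plan) -> Dict[str, Any]:
    results: Dict[str, Any] = {}
    for name, step in plan:
        results[name] = step(results)  # later steps can use earlier outputs
    return results

plan: Plan = [
    ("booking", lambda r: book_flight("Bangalore")),                       # 1. call flight API
    ("details", lambda r: f"Flight {r['booking']['flight']} to "
                          f"{r['booking']['destination']}"),                # 2. extract booking info
    ("email", lambda r: send_email("me@example.com", r["details"])),      # 3. send mail
]

results = execute_plan(plan)
print(results["email"])
```

Keeping every intermediate result in `results` also gives you an audit trail of what the agent did at each step.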

8. Deploying Your Agent

Option 1: FastAPI (for REST API)

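```bash
pip install fastapi uvicorn
```

In `main.py`, alongside the agent definition:

```python
from fastapi import FastAPI
from pydantic import BaseModel

app = FastAPI()

class Question(BaseModel):
    query: str

# A plain `def` lets FastAPI run the blocking agent call in a worker thread
@app.post("/ask")
def ask_agent(question: Question):
    # `agent` is the LangChain agent built earlier in this file
    return {"response": agent.run(question.query)}
```

Clients send a JSON body such as `{"query": "Who is the CEO of Google?"}`.

Run:

```bash
uvicorn main:app --reload
```

Option 2: Dockerize It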

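Dockerfile:

```dockerfile
FROM python:3.10-slim

WORKDIR /app

# Install dependencies first so Docker can cache this layer
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

COPY . .

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]
```

Build & Run:

```bash
docker build -t ai-agent .
# -e passes OPENAI_API_KEY from your shell into the container
docker run -p 8000:8000 -e OPENAI_API_KEY ai-agent
```

Option 3: Deploy to Cloud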

AWS ECS / Fargate – Serverless containers.

GCP Cloud Run – Auto-scaled containers.

Kubernetes – For large-scale agent orchestration.

9. Real-World Use Cases

Customer Support Agent – Queries DB + answers FAQs.

DevOps Agent – Monitors logs, restarts pods, applies fixes.

Research Agent – Scrapes papers, summarizes, generates reports.

E-commerce Agent – Updates stock, responds to customer queries.

Healthcare Agent – Suggests treatments based on a clinical database, for review by clinicians.

10. Best Practices for Agent Development

Guardrails → Restrict what tools the agent can access.

Evaluation → Run a fixed set of test prompts with known expected answers to measure reasoning and tool use.

Feedback Loops → Implement retries & self-correction.

Human-in-the-Loop → Keep humans for critical tasks.

Scalability → Use caching (of LLM responses and embeddings) and GPUs.
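Guardrails and human-in-the-loop checks can both live in the code that dispatches tool calls, so they run before anything executes. The sketch below assumes a hypothetical allowlist of tools and a set of "critical" tools that need explicit approval; the `approve` callback stands in for whatever review step you use, such as a confirmation prompt in a UI.

```python
from typing import Callable, Dict, Set

class ToolPolicyError(Exception):
    pass

def guarded_call(
    tool_name: str,
    arg: str,
    tools: Dict[str, Callable[[str], str]],
    allowed: Set[str],
    needs_approval: Set[str],
    approve: Callable[[str, str], bool],
) -> str:
    # Guardrail: refuse any tool that is not explicitly allowed
    if tool_name not in allowed:
        raise ToolPolicyError(f"Tool '{tool_name}' is not allowed")
    # Human-in-the-loop: critical tools run only after a person approves
    if tool_name in needs_approval and not approve(tool_name, arg):
        raise ToolPolicyError(f"Call to '{tool_name}' was rejected by a reviewer")
    return tools[tool_name](arg)

tools = {
    "search": lambda q: f"results for {q}",
    "restart_pod": lambda pod: f"restarted {pod}",  # hypothetical critical action
    "delete_db": lambda name: f"deleted {name}",    # hypothetical, never allowed
}
allowed = {"search", "restart_pod"}
needs_approval = {"restart_pod"}

def deny_all(tool: str, arg: str) -> bool:
    # Stand-in reviewer that rejects every request
    return False

print(guarded_call("search", "error logs", tools, allowed, needs_approval, deny_all))
```

Because the check sits in the dispatcher rather than in the prompt, the LLM cannot talk its way past it.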

11. Future of Agentic AI

Agentic AI will shift us from:

Static chatbots → Dynamic problem solvers.

Reactive AI → Proactive, autonomous assistants.

Single-task AI → Multi-task, multi-agent collaborations.

Imagine a DevOps Agent that monitors your Kubernetes cluster, applies patches, and optimizes scaling—before issues even occur.

Conclusion

We’ve gone from:

Basics (What is an agent?)

System setup (requirements, dependencies).

Building an agent (code examples).

Databases & memory (FAISS, Pinecone).

Training on your own data (RAG).

Deployment (FastAPI, Docker, Cloud).

Advanced use cases (multi-step reasoning, real-world agents).

The journey to Agentic AI is about combining Generative AI with tools, memory, and planning. Start small, expand with databases, and finally deploy in production.

The future belongs to autonomous AI systems that don’t just think — they act.
