Model Context Protocol (MCP): Standardizing How AI Models Connect with Tools and Data

The rapid rise of AI in 2023–2025 has created a new challenge: how do we let AI models safely and consistently talk to tools, APIs, and data sources?

Many companies are building AI assistants, copilots, or automation workflows, but each integration tends to be custom, inconsistent, and often insecure. That’s where the Model Context Protocol (MCP) comes in.

What is MCP?

MCP (Model Context Protocol) is an open standard, introduced by Anthropic in November 2024, that defines how AI applications connect models to external tools, APIs, and data sources.

It provides a common language and contract between:

Models → LLMs (like GPT, Claude, Gemini, LLaMA)

Tools → Databases, SaaS APIs, developer utilities

Clients → IDEs, CLIs, or custom apps where users interact

Think of MCP as the “Kubernetes for AI tool integration”: it standardizes communication so that each pairing of model and tool no longer needs its own bespoke connector.

Why MCP Matters

Interoperability

Instead of writing one-off connectors for each LLM, you write an MCP server once and use it from any MCP-compatible client.

Security

A model can only call the tools that connected servers expose, and the MCP specification expects the host application to obtain user consent before a tool is invoked.

Transparency

Tools declare their capabilities (endpoints, functions, parameters), which the model can read before using.

Extensibility

New tools can be plugged into an MCP ecosystem without changing the model.

Developer Productivity

IDEs like VS Code can expose tools (e.g., Git, Docker, Jira) to models consistently, improving workflows.

MCP Architecture

MCP follows a client–server model:

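Client → The environment where the user interacts (e.g., a VS Code extension). The MCP specification calls this application the host; it runs one client connection per server.

Server → A tool or data source exposed via MCP (e.g., a database, API, or custom script).

Model → The LLM that interprets user intent and decides which tool to call. The client sends the actual request to the server on the model’s behalf.

[User] → [Client (IDE/CLI)] ↔ [Model]

[Client (IDE/CLI)] ↔ [MCP Servers (Tools/APIs)]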
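MCP messages are JSON-RPC 2.0, and the specification names methods such as tools/list and tools/call. As a rough sketch of what travels between the client and a server in the diagram above, the snippet below builds those two requests. The helper name make_request, the tool name get_pods, and its arguments are illustrative assumptions, not part of any SDK.

```python
import json
from itertools import count

# Hypothetical helper state: a counter that hands out request ids.
_ids = count(1)


def make_request(method, params=None):
    """Return a JSON-RPC 2.0 request dict with a fresh numeric id."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# The client asks a server which tools it offers.
list_tools = make_request("tools/list")

# After the model picks a tool, the client sends the call on its behalf.
# "get_pods" and its arguments are illustrative, not a real server's tools.
call_tool = make_request(
    "tools/call",
    {"name": "get_pods", "arguments": {"namespace": "staging"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

The model never appears in these messages: it only proposes the tool name and arguments, and the client turns that proposal into a request.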

Example Workflow

User asks: “Deploy my Docker container to staging.”

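The client asks its connected MCP servers which tools they offer and passes the list to the model.

The model finds relevant tools on the docker and kubernetes servers.

The model requests a tool call with structured parameters, and the client sends it to the right server.

The client returns the result to the model, which reports back to the user.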
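The workflow above can be sketched end to end with in-memory stand-ins. Everything here is hypothetical: the two server dictionaries, the tool names build_image and deploy, the image tag, and the keyword-matching choose_tool function, which only stands in for the reasoning a real LLM would do over tool descriptions.

```python
# Hypothetical in-memory stand-ins for two MCP servers; real servers would
# be separate processes reached over a transport.
SERVERS = {
    "docker": {
        "build_image": lambda args: f"built {args['tag']}",
    },
    "kubernetes": {
        "deploy": lambda args: f"deployed {args['image']} to {args['namespace']}",
    },
}


def list_tools():
    """Client side: collect (server, tool) pairs from every connected server."""
    return [(server, tool) for server, tools in SERVERS.items() for tool in tools]


def choose_tool(user_request, tools):
    """Stand-in for the model: pick the first tool whose name appears in the
    request. A real LLM would reason over tool descriptions instead."""
    for server, tool in tools:
        if tool in user_request.lower():
            return server, tool
    return None


def call_tool(server, tool, arguments):
    """Client side: invoke the chosen tool with structured arguments."""
    return SERVERS[server][tool](arguments)


tools = list_tools()
choice = choose_tool("Deploy my Docker container to staging", tools)
result = call_tool(*choice, {"image": "myapp:latest", "namespace": "staging"})
print(result)
```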

Core Concepts in MCP

Tool Definitions → Tools describe their inputs with JSON Schema, much as OpenAPI specs describe HTTP endpoints.

Schema Validation → Ensures the model’s requests match the tool’s contract before anything runs.

Resources (Context) → Servers can also expose read-only data (like project configs) that the client loads into the model’s context to improve grounding.

Execution Layer → Actual tool calls run on the server side, so the model only proposes calls and never executes anything itself.
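To make the schema validation idea concrete, here is a minimal sketch of a server checking a model’s arguments against a tool’s schema before running anything. It covers only a small subset of JSON Schema (properties, required, type, default), and rejecting unknown arguments is a deliberate choice that is stricter than JSON Schema’s default. The function name validate_arguments and the example schema are assumptions; a production server would more likely use a full JSON Schema validator.

```python
# Maps JSON Schema type names to Python types (a subset, for illustration).
JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def validate_arguments(schema, arguments):
    """Check arguments against a small subset of JSON Schema.

    Returns a new dict with defaults filled in, or raises ValueError.
    """
    properties = schema.get("properties", {})
    unknown = set(arguments) - set(properties)
    if unknown:
        raise ValueError(f"unexpected arguments: {sorted(unknown)}")

    validated = {}
    for name, spec in properties.items():
        if name in arguments:
            value = arguments[name]
            # bool is a subclass of int in Python, so reject it explicitly
            # wherever the schema does not ask for a boolean.
            wrong_bool = isinstance(value, bool) and spec["type"] != "boolean"
            if wrong_bool or not isinstance(value, JSON_TYPES[spec["type"]]):
                raise ValueError(f"{name} must be of type {spec['type']}")
            validated[name] = value
        elif "default" in spec:
            validated[name] = spec["default"]
        elif name in schema.get("required", []):
            raise ValueError(f"missing required argument: {name}")
    return validated


# Hypothetical schema for a tool that lists pods in a namespace.
GET_PODS_SCHEMA = {
    "type": "object",
    "properties": {"namespace": {"type": "string", "default": "default"}},
}

print(validate_arguments(GET_PODS_SCHEMA, {}))
```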

Example: Exposing a CLI Tool with MCP

Imagine you want to expose kubectl to your AI model via MCP.

    {
      "name": "kubernetes",
      "description": "Manage Kubernetes clusters",
      "commands": [
        {
          "name": "get_pods",
          "description": "List pods in a namespace",
          "parameters": {
            "namespace": { "type": "string", "default": "default" }
          }
        },
        {
          "name": "apply_yaml",
          "description": "Apply a YAML manifest",
          "parameters": {
            "file": { "type": "string" }
          }
        }
      ]
    }

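🔹 This spec is a simplified illustration rather than MCP’s exact wire format. With a definition like it, any MCP-compatible client can let the model list pods or apply manifests, and nothing beyond what the server implements.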
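For comparison, an MCP server answers a tools/list request with entries that each carry a name, a description, and an inputSchema written as JSON Schema. The sketch below converts the spec above into that shape. The function name to_mcp_tools is hypothetical, and treating any parameter without a default as required is an assumption made for illustration.

```python
import json

# The simplified spec from the example above, as a Python dict.
SPEC = {
    "name": "kubernetes",
    "description": "Manage Kubernetes clusters",
    "commands": [
        {
            "name": "get_pods",
            "description": "List pods in a namespace",
            "parameters": {"namespace": {"type": "string", "default": "default"}},
        },
        {
            "name": "apply_yaml",
            "description": "Apply a YAML manifest",
            "parameters": {"file": {"type": "string"}},
        },
    ],
}


def to_mcp_tools(spec):
    """Convert each command into a tool entry with a JSON Schema inputSchema.

    Assumption: a parameter without a default is treated as required.
    """
    tools = []
    for command in spec["commands"]:
        params = command["parameters"]
        required = [name for name, p in params.items() if "default" not in p]
        input_schema = {"type": "object", "properties": params}
        if required:
            input_schema["required"] = required
        tools.append(
            {
                "name": command["name"],
                "description": command["description"],
                "inputSchema": input_schema,
            }
        )
    return tools


tools = to_mcp_tools(SPEC)
print(json.dumps({"tools": tools}, indent=2))
```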

Benefits for DevOps & AI Engineers

Safe Automation → Expose only controlled commands (no raw rm -rf /).

Consistency → Whether you use GPT or Claude, the tool contract looks the same.

Composable Systems → Chain multiple tools (Git → Terraform → Kubernetes) in a single workflow.

Easier Maintenance → Update one MCP server and every connected client benefits.
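One way to get the safe automation described above is an allow-list that maps each tool name to a fixed kubectl invocation, so the model can fill in argument values but never supply a raw command line. This is a minimal sketch under that assumption; ALLOWED_COMMANDS, build_command, and the two builder functions are hypothetical names.

```python
# Hypothetical builders: each returns a fixed kubectl argv with the model's
# values slotted into known positions.
def _get_pods(args):
    return ["kubectl", "get", "pods", "--namespace", args.get("namespace", "default")]


def _apply_yaml(args):
    return ["kubectl", "apply", "-f", args["file"]]


# Allow-list: only these tool names can ever produce a command.
ALLOWED_COMMANDS = {"get_pods": _get_pods, "apply_yaml": _apply_yaml}


def build_command(tool_name, arguments):
    """Return an argv list for an allow-listed tool, or raise PermissionError.

    The list form is meant for subprocess.run(argv) without shell=True, so
    argument values are never interpreted by a shell.
    """
    try:
        builder = ALLOWED_COMMANDS[tool_name]
    except KeyError:
        raise PermissionError(f"tool not allowed: {tool_name}") from None
    return builder(arguments)


print(build_command("get_pods", {"namespace": "staging"}))
```

The sketch only builds the command. A real server would still want to restrict which manifest paths apply_yaml accepts before running anything.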

Real-World Use Cases

AI in IDEs → VS Code exposing Git, Docker, and testing tools via MCP.

ChatOps → Slack bots using MCP to interact with Jenkins or AWS.

Data Analysis → LLMs querying databases with explicit schemas.

Multi-agent Systems → Agents sharing tools using the same protocol.

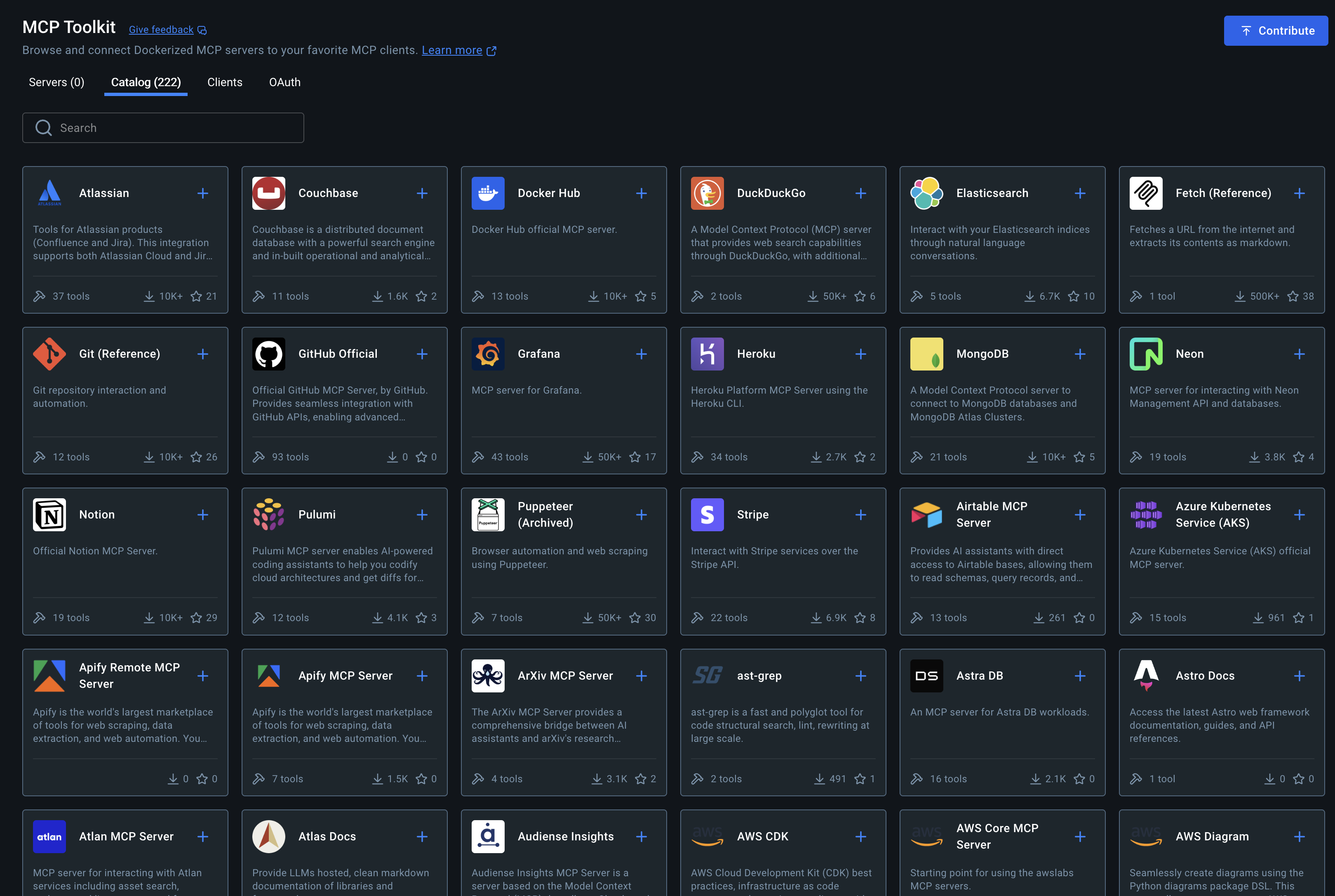

Getting Started with MCP

Define a Tool Spec → JSON/YAML describing functions & parameters.

Run an MCP Server → Expose the tools over one of MCP’s transports: stdio for local processes or HTTP for remote servers.

Connect a Model → Use an MCP-compatible client (from OpenAI, Anthropic, etc.).

Grant Permissions → Explicitly approve which tools a model can use.
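The steps above can be sketched as a single request handler. This is a simplified illustration, not a complete server: it skips the initialize handshake and the transport layer, which the official MCP SDKs handle in practice. The echo tool, the GRANTED set, and the choice of error codes for refused calls are assumptions; in a real deployment the permission check usually lives in the host application rather than the server.

```python
import json

# Hypothetical tool registry: name -> description, input schema, handler.
TOOLS = {
    "echo": {
        "description": "Return the given text unchanged",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "handler": lambda args: args["text"],
    },
}

# Tools that have been explicitly approved for the model to use.
GRANTED = {"echo"}


def _reply(request, key, payload):
    """Wrap a result or error in a JSON-RPC 2.0 response string."""
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), key: payload})


def handle(raw_message):
    """Handle one JSON-RPC request string and return the response string."""
    request = json.loads(raw_message)
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return _reply(request, "result", {"tools": tools})

    if method == "tools/call":
        name = params.get("name")
        if name not in TOOLS or name not in GRANTED:
            # -32602 is JSON-RPC's "Invalid params" code.
            error = {"code": -32602, "message": f"Unknown or ungranted tool: {name}"}
            return _reply(request, "error", error)
        text = TOOLS[name]["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return _reply(request, "result", result)

    # -32601 is JSON-RPC's "Method not found" code.
    return _reply(request, "error", {"code": -32601, "message": "Method not found"})


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "echo", "arguments": {"text": "hello"}},
}
print(handle(json.dumps(request)))
```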

Final Thoughts

The Model Context Protocol (MCP) is emerging as a key enabler of safe, standardized AI integrations.

Just as Kubernetes standardized container orchestration, MCP aims to do the same for AI–tool interactions.

For DevOps and platform engineers, this means:

You can expose infrastructure tools safely to LLMs.

You reduce custom glue code between AI models and APIs.

You gain security and consistency across multi-LLM environments.

As AI adoption accelerates, MCP is poised to become the backbone of enterprise AI-tooling. If you’re building AI-driven DevOps assistants or copilots, MCP should be on your radar.
